9. One-Sided Communication

One-sided communication is a communication paradigm in which a process directly reads or updates the memory of another process without that process taking part in each individual transfer. It is also called Remote Memory Access (RMA).

9.1. Window Allocation

The target process specifies a window of its memory, and the origin processes can perform operations such as putting data into that window, getting data from that window, or accumulating data in that window. A process can be target and origin at the same time within a window.

To enable one-sided communication, an MPI_Win needs to be created.

Typically, this is accomplished using the MPI_Win_create or MPI_Win_allocate functions.

MPI_Win_create:

Creates a window object that exposes existing local memory for remote access by the processes of the communicator comm.

    int MPI_Win_create(void *base,
                       MPI_Aint size,
                       int disp_unit,
                       MPI_Info info,
                       MPI_Comm comm,
                       MPI_Win *win);

base: The starting address of the window in the calling process’s address space.

size: The size of the window in bytes.

disp_unit: The local unit size for displacements, in bytes. Displacements passed to RMA calls that target this process are multiplied by this value, so it is typically the size of the element type stored in the window.

info: An info object with optional hints for window creation. MPI_INFO_NULL can be passed if no hints are needed.

comm: The communicator associated with the window. MPI_Win_create is a collective call over this communicator.

win: The resulting window object. This handle is passed to all subsequent RMA and synchronization calls on the window.

MPI_Win_free:

After the window has been used, it should be freed using MPI_Win_free, which takes a pointer to the window handle:

    MPI_Win_free(&win);

9.2. Remote Memory Access

In MPI you can use one-sided communication to put data into a window (MPI_Put), get data from a window (MPI_Get), or accumulate data in a window (MPI_Accumulate).

MPI_Put

Copies data from a local memory buffer to a remote memory window.

    int MPI_Put(const void *origin_addr,
                int origin_count,
                MPI_Datatype origin_datatype,
                int target_rank,
                MPI_Aint target_disp,
                int target_count,
                MPI_Datatype target_datatype,
                MPI_Win win);

origin_addr: A pointer to the starting address of the data in the memory of the origin process. This is the data to be copied to the target process.

origin_count: The number of elements of type origin_datatype to be sent from the origin process.

origin_datatype: The datatype of each element in the origin_addr buffer.

target_rank: The rank of the target process in the communicator associated with the window win. This is the process whose memory will receive the data.

target_disp: The displacement from the beginning of the target process’s window to the location where the data will be copied. It is counted in units of the disp_unit that the target specified when the window was created, so it is a byte offset only if disp_unit is 1.

target_count: The number of elements of type target_datatype to be written at the target process.

target_datatype: The datatype of each element in the target buffer.

win: The MPI window object through which the target memory is accessed.
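The MPI standard defines the address accessed at the target as the window base plus target_disp multiplied by the target's disp_unit. The following plain C++ sketch only models this arithmetic; it does not call MPI, and the helper names windowByteOffset and fitsInWindow are hypothetical, chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (not part of MPI): byte offset inside the target window
// that an RMA call with displacement target_disp refers to.
std::size_t windowByteOffset(std::size_t target_disp, std::size_t disp_unit) {
    assert(disp_unit > 0);
    return target_disp * disp_unit;
}

// Hypothetical helper: true if `count` elements of `elem_size` bytes, written
// at displacement target_disp, stay inside a window of `win_size` bytes.
bool fitsInWindow(std::size_t target_disp, std::size_t disp_unit,
                  std::size_t count, std::size_t elem_size,
                  std::size_t win_size) {
    return windowByteOffset(target_disp, disp_unit) + count * elem_size <= win_size;
}
```

For a window of four doubles created with disp_unit = sizeof(double), target_disp = 3 addresses the last element, and writing two elements there would run past the end of the window.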

MPI_Get

Copies data from a remote memory window to a local memory buffer.

    int MPI_Get(void *origin_addr,
                int origin_count,
                MPI_Datatype origin_datatype,
                int target_rank,
                MPI_Aint target_disp,
                int target_count,
                MPI_Datatype target_datatype,
                MPI_Win win);

MPI_Accumulate

Combines data from a local buffer with the data in a remote memory window using a predefined reduction operation op, for example MPI_SUM. User-defined operations cannot be used.

    int MPI_Accumulate(const void *origin_addr,
                       int origin_count,
                       MPI_Datatype origin_datatype,
                       int target_rank,
                       MPI_Aint target_disp,
                       int target_count,
                       MPI_Datatype target_datatype,
                       MPI_Op op,
                       MPI_Win win);

In the one-sided communication paradigm, three different synchronization mechanisms (p.588) are used. One of them is active target synchronization with fences, which uses the following MPI function:

MPI_Win_fence:

An access epoch at an origin process or an exposure epoch at a target process is opened and closed by calls to MPI_WIN_FENCE. An origin process can access windows at all target processes in the group during such an access epoch, and the local window can be accessed by all MPI processes in the group during such an exposure epoch.

    int MPI_Win_fence(int assert, MPI_Win win);

Task

Reduction with one-sided communication

Create three different implementations of a sum-reduction operation with one-sided communication.

Use MPI_Put for the first implementation, MPI_Get for the second implementation and MPI_Accumulate for the last implementation.

Execute your implementations and demonstrate their functionality.

9.3. Mandelbrot Set

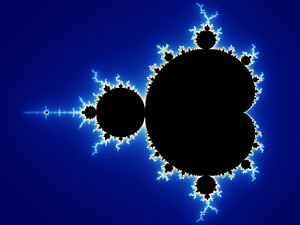

The Mandelbrot set is the set of all complex numbers \(c\) for which the sequence \(z_{n+1} = f_c(z_n)\) with \(f_c(z) = z^2 + c\), starting at \(z_0 = 0\), does not diverge to infinity. The set can be visualized by converting the pixel coordinates of an image into complex numbers and iterating this function for each of them.

Fig. 9.3.1 Visualization of the Mandelbrot Set. The pixels in the black area, converted to complex numbers, belong to the set.

The example code for the computation of one pixel is:

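    int calcPixel(double real, double imag, int limit = 100) {
        // Start at z = c, which is the first iterate of z -> z^2 + c from z = 0.
        double zReal = real;
        double zImag = imag;
        for (int i = 0; i < limit; ++i) {
            double r2 = zReal * zReal;
            double i2 = zImag * zImag;
            // |z|^2 > 4 means |z| > 2, so the sequence diverges.
            if (r2 + i2 > 4.0) return i;
            // z = z^2 + c; the imaginary part is updated first because it needs the old zReal.
            zImag = 2.0 * zReal * zImag + imag;
            zReal = r2 - i2 + real;
        }
        // No escape within limit iterations: the point is treated as part of the set.
        return limit;
    }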

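The pixel coordinates must be scaled to the complex plane beforehand:

    // Scale factors: distance between neighboring pixels in the complex plane
    double dx = (real_max - real_min) / (width - 1);
    double dy = (imag_max - imag_min) / (height - 1);

    // Complex number for pixel (x, y), computed inside the pixel loops
    double real = real_min + x * dx;
    double imag = imag_min + y * dy;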
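The scaling and the per-pixel iteration can be combined into a function that computes one image row. This is a minimal sketch without MPI: the struct View and the function computeRow are hypothetical names introduced here, and calcPixel repeats the logic of the example code so that the block is self-contained.

```cpp
#include <cassert>
#include <vector>

// Escape-time iteration for c = real + i*imag (same logic as the example code).
int calcPixel(double real, double imag, int limit = 100) {
    double zReal = real;
    double zImag = imag;
    for (int i = 0; i < limit; ++i) {
        double r2 = zReal * zReal;
        double i2 = zImag * zImag;
        if (r2 + i2 > 4.0) return i;
        zImag = 2.0 * zReal * zImag + imag;
        zReal = r2 - i2 + real;
    }
    return limit;
}

// Hypothetical container for the visible part of the complex plane.
struct View {
    double real_min, real_max, imag_min, imag_max;
};

// Computes the iteration counts for all pixels of image row y.
std::vector<int> computeRow(int y, int width, int height, const View& v, int limit) {
    assert(width > 1 && height > 1);  // the scale factors divide by (size - 1)
    const double dx = (v.real_max - v.real_min) / (width - 1);
    const double dy = (v.imag_max - v.imag_min) / (height - 1);
    const double imag = v.imag_min + y * dy;
    std::vector<int> row(width);
    for (int x = 0; x < width; ++x) {
        row[x] = calcPixel(v.real_min + x * dx, imag, limit);
    }
    return row;
}
```

A row computed this way is a contiguous array of int values, which makes it a convenient unit to transfer into a window with a single RMA call.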

Task

Mandelbrot-Set with MPI

Implement C++ code which generates an image of the Mandelbrot set using an MPI window and general active target synchronization.

The root process draws the picture, and the other processes compute parts of the picture (e.g. a subset of the lines) and send these parts to the root process. The root process starts drawing the picture after receiving all data.

Use real_min=-2.0 real_max=1.0 imag_min=-1.0 imag_max=1.0 to scale the pixels

Use maxIterations=1000

Use the color scheme of your choice, but the Mandelbrot-Set must be recognizable

Use a resolution of at least 1920x1080

Save the picture as a Portable Pixel Map (.ppm). This format can be written without an additional library. See PPM Specification

Use the Magic Number P3
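One possible way to split the image lines among the computing processes is a block distribution. The sketch below is only one option under stated assumptions: rowRange is a hypothetical helper, and it assumes that the workers are numbered from 0 (for example rank - 1 if rank 0 is the root that only draws).

```cpp
#include <cassert>
#include <utility>

// Returns the half-open row range [first, last) assigned to worker w.
// If height is not divisible by numWorkers, the first (height % numWorkers)
// workers each get one additional row.
std::pair<int, int> rowRange(int w, int numWorkers, int height) {
    assert(numWorkers > 0 && w >= 0 && w < numWorkers);
    const int base = height / numWorkers;
    const int extra = height % numWorkers;
    const int first = w * base + (w < extra ? w : extra);
    const int count = base + (w < extra ? 1 : 0);
    return {first, first + count};
}
```

With 1080 lines and 4 workers, every worker receives 270 consecutive lines. Because rows in the set take far more iterations than rows outside it, a cyclic distribution (worker w computes lines w, w + numWorkers, ...) may balance the load better.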

The .ppm file can be created with:

    // requires #include <fstream>
    std::ofstream outFile("mandelbrot.ppm");
    outFile << "P3" << std::endl;
    outFile << width << std::endl;
    outFile << height << std::endl;
    outFile << "255" << std::endl;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            // generate pixel from result[x, y]
            outFile << pixel[0] << " " << pixel[1] << " " << pixel[2] << " ";
        }
        outFile << std::endl;
    }
    outFile.close();
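The step "generate pixel from result[x, y]" depends on the chosen color scheme. As a hedged illustration, the following sketch maps iteration counts to a simple grayscale and writes the P3 data to any output stream. The functions toColor and writePpm, and the row-major layout of result, are assumptions made for this example.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <ostream>
#include <sstream>  // only needed when writing to a std::ostringstream, e.g. in tests
#include <vector>

// Hypothetical color scheme: points inside the set are black, all other
// points get a gray value that is lighter the faster they escaped.
std::array<int, 3> toColor(int iterations, int maxIterations) {
    assert(maxIterations > 0);
    if (iterations >= maxIterations) return {0, 0, 0};
    const int shade = 255 - 255 * iterations / maxIterations;
    return {shade, shade, shade};
}

// Writes a P3 image; result holds one iteration count per pixel, row by row.
void writePpm(std::ostream& out, const std::vector<int>& result,
              int width, int height, int maxIterations) {
    assert(result.size() == static_cast<std::size_t>(width) * height);
    out << "P3\n" << width << " " << height << "\n255\n";
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::array<int, 3> c = toColor(result[y * width + x], maxIterations);
            out << c[0] << ' ' << c[1] << ' ' << c[2] << ' ';
        }
        out << '\n';
    }
}
```

Passing a std::ofstream opened on "mandelbrot.ppm" as out produces the image file. Taking a std::ostream& instead of a file name keeps the function usable with string streams as well.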